In a recent partnership with Stanford’s Deliberative Democracy Lab, Meta conducted a community forum on generative AI to gather feedback from actual users on their expectations and concerns around responsible AI development. Over 1,500 people from Brazil, Germany, Spain, and the United States participated in the forum, shedding light on key issues and challenges in AI development.

The forum found that a majority of participants in each country believe AI has had a positive impact. Most participants also agreed that AI chatbots should be able to use past conversations to improve their responses, as long as users are informed. Participants also broadly accepted that AI chatbots may behave in human-like ways, provided this is properly disclosed.

Responses to certain statements, however, varied by region, reflecting differing views on the benefits and risks of AI. Although many participants changed their views over the course of the discussions, these regional differences highlight how complex public perception of AI development is.

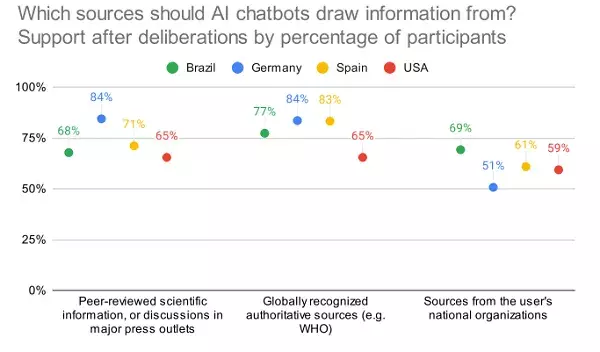

The report also examined consumer attitudes towards AI disclosure and towards the sources from which AI tools should draw their information. Notably, approval of these sources was relatively low among U.S. participants, which may point to a need for greater transparency and accountability in AI development.

One notable ethical consideration not directly addressed in the study is the influence of controls and weightings within AI tools. For instance, recent controversies surrounding Google’s Gemini system and Meta’s Llama model underscore the importance of ensuring diverse and balanced representations in AI outputs. The question of whether corporations should have significant control over these tools raises concerns about bias and fairness in AI development.

While many questions about AI development remain unanswered, it is evident that universal guardrails are needed to protect users from misinformation and misleading responses. The debate over responsible AI also points to the need for broader regulation to ensure equal representation and the ethical use of AI tools. As AI capabilities continue to evolve, it is crucial to establish guidelines that prioritize user safety and ethical standards.

Ultimately, the ongoing debate highlights the need for collaborative efforts to address the ethical implications of AI. By weighing public feedback, regional variations, and ethical considerations together, stakeholders can work towards a more transparent and equitable AI landscape, guided by ethical principles and regulatory frameworks that safeguard users and promote responsible innovation.
