The unauthorized scraping of over 170 images and personal details of children from Brazil for the purpose of training AI has raised serious ethical concerns. These images were collected without the knowledge or consent of the children involved, a blatant violation of their privacy rights. The dataset that contained them, LAION-5B, included content dating back to the mid-1990s, long before internet users could have anticipated such use of their images. This scraping not only compromises the privacy of these children but also exposes them to potential exploitation.

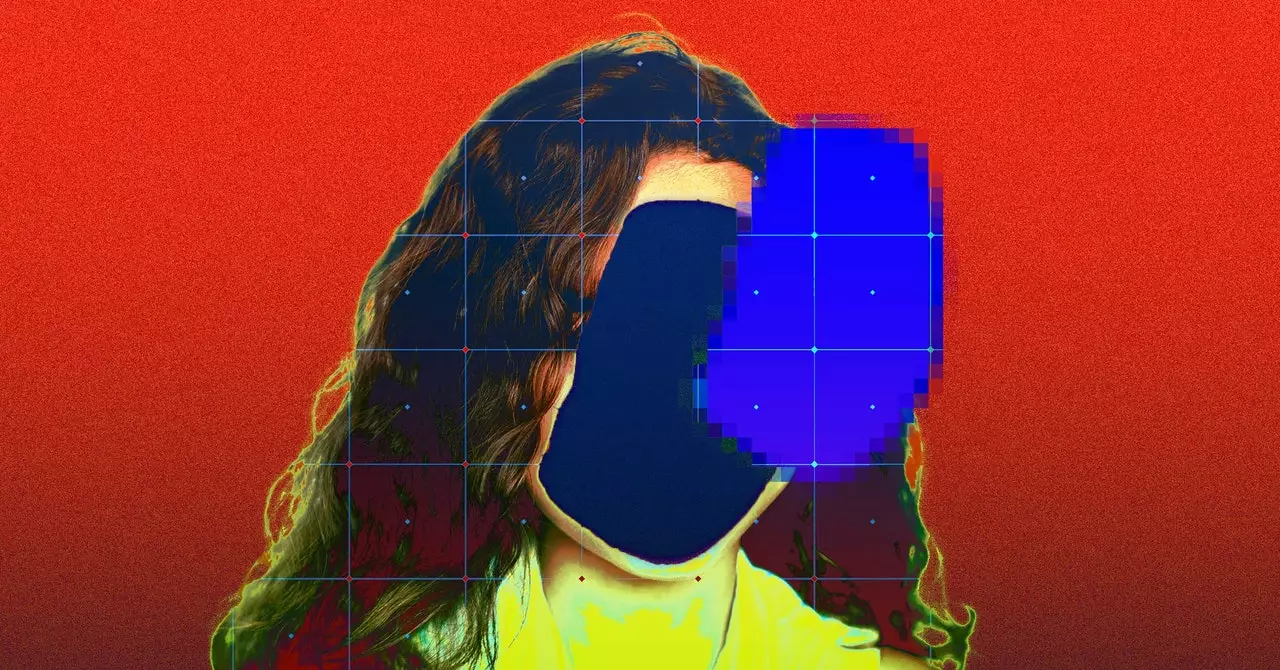

The AI models trained on datasets like LAION-5B have the capability to generate realistic imagery based on the information provided. This means that any child who has their photo or video online is at risk of having their image manipulated in ways they did not consent to. The implications of this technology go beyond mere image generation, as it can lead to the creation of deepfakes and other malicious content that can harm individuals, especially children.

The images of children used in the LAION-5B dataset were sourced from personal blogs and YouTube videos, where they were shared in what was perceived to be private settings. These children, along with their families, had an expectation of privacy when posting these images, making the unauthorized scraping of their content even more alarming. Many of these images were not easily accessible through a reverse image search, further emphasizing the violation of privacy that occurred.

Following the discovery of these privacy violations, LAION has taken steps to address the issue. LAION-5B was taken down in response to reports indicating links to illegal content on the web. Collaboration with entities like the Internet Watch Foundation, Stanford University, and Human Rights Watch is underway to remove any references to illegal content from the dataset. However, the damage has already been done, and the potential implications of this breach of privacy are far-reaching.

The use of AI technology to generate explicit content, including deepfakes, poses significant risks to individuals, particularly children. Instances of bullying in schools, along with the potential for exploitation of sensitive information such as locations or medical data, highlight the urgent need for ethical considerations in the development and application of AI tools. The unauthorized scraping also breached YouTube’s terms of service, which further underscores the legal and ethical challenges posed by the use of personal data in AI training.

The case of the LAION-5B dataset serves as a stark reminder of the ethical dilemmas surrounding the use of children’s images in AI training data. The need to prioritize data privacy, consent, and ethical standards in the development and deployment of AI technologies cannot be overstated. It is imperative for organizations, researchers, and policymakers to work together to establish clear guidelines and safeguards to protect individuals, especially children, from potential harm and exploitation in the digital age.
