In the rapidly evolving world of artificial intelligence, enhancing the accuracy and efficiency of algorithms is of paramount importance. One innovative approach to achieving this goal comes from the Massachusetts Institute of Technology’s (MIT) Computer Science and Artificial Intelligence Laboratory (CSAIL). Researchers there have developed an algorithm known as Co-LLM, which redefines how large language models (LLMs) can collaborate. This article explores the nature of Co-LLM, its operational mechanics, and its potential implications for various fields.

The Paradigm Shift in Language Model Collaboration

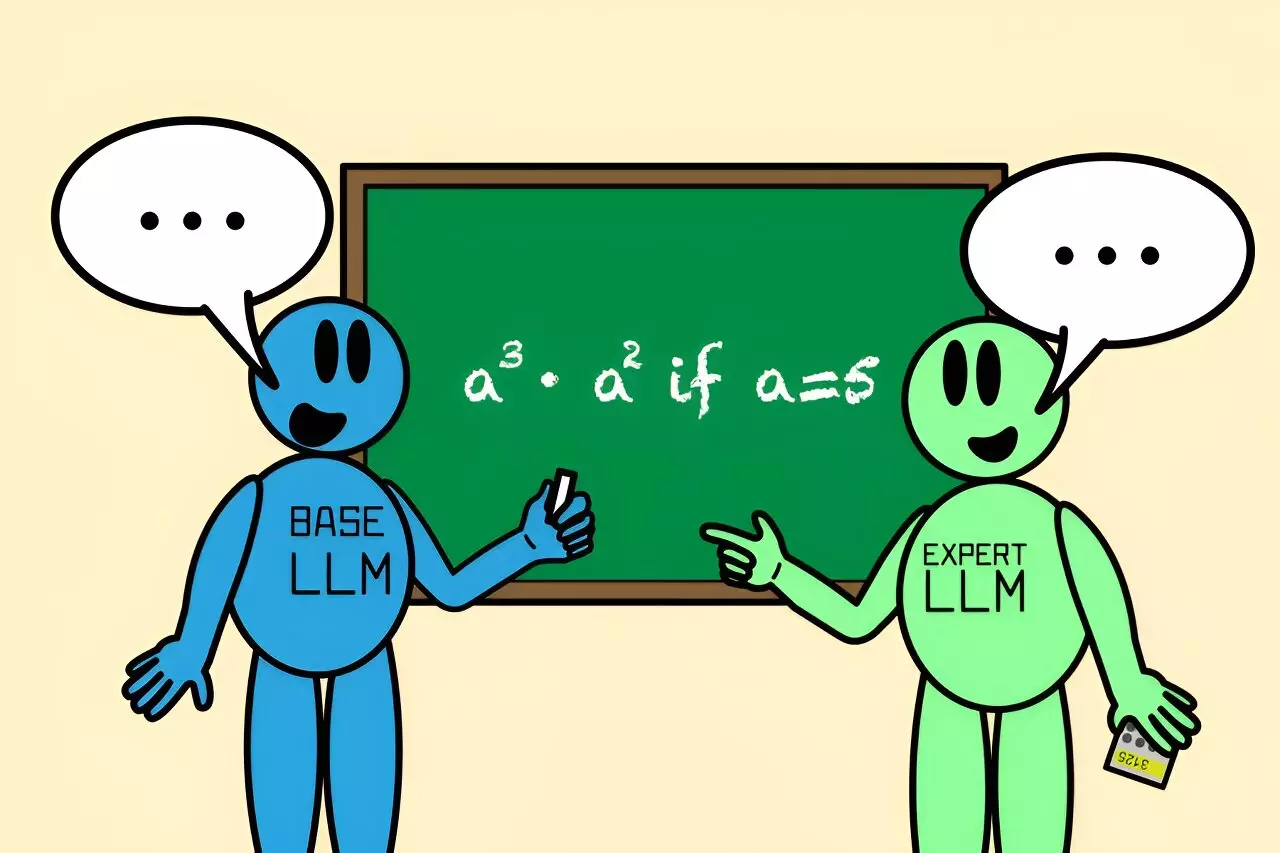

Traditionally, training LLMs has involved substantially complex methods, relying on extensive datasets and precise formulas for improvement. However, many existing models struggle with recognizing their moments of inadequacy, resulting in subpar outputs. MIT’s Co-LLM serves as a refreshing departure from this norm by employing a more organic system of interaction between general-purpose and specialized models. In essence, Co-LLM facilitates a collaboration similar to how humans consult specialists when confronted with complex inquiries, thus fostering a more seamless interactive framework.

At its core, Co-LLM allows a general-purpose LLM to generate responses while simultaneously evaluating its accuracy. The algorithm intelligently identifies points in the text that require specialized knowledge and deftly integrates input from an expert model when necessary. This ensures the output is not only faster but also considerably more accurate. By streamlining this process, Co-LLM shifts the focus from a rigid, formulaic approach to a more adaptable and efficient cooperative model.

How Co-LLM Operates: A Technical Overview

The mechanics behind Co-LLM hinge on a “switch variable,” a machine-learning-derived tool that evaluates the competence of each word in the context of both the general-purpose and expert models. This switch functions much like a project manager, overseeing the integration of specialized input when needed. For example, if tasked with naming extinct bear species, the general-purpose model begins formulating the response, while the switch variable identifies which portions of the output would benefit from the expert model’s precision. The result is a melding of general understanding and niche expertise, vastly improving the quality of the response.

The algorithm showcases its versatility by adapting to various domains, such as medicine or mathematics. The researchers have successfully combined a base LLM with specialized models, like Meditron for medical inquiries, where nuances matter greatly. Additionally, Co-LLM encourages the general model to understand and learn from the more expert model’s strengths. As it interacts, it naturally develops patterns of collaboration, much like how humans recognize the need for expert assistance.

The outcome of utilizing Co-LLM can be striking. In experiments, when Co-LLM was tasked with answering complex questions, its integration with specialized models often outperformed solitary LLMs that had not undergone fine-tuning. For instance, in a mathematical exercise, a general-purpose model initially miscalculated, but through Co-LLM’s collaboration with a specialized model focused on arithmetic reasoning, the final, correct answer emerged. These improvements exemplify why Co-LLM is considered a significant leap forward in the domain of LLM applications.

Moreover, Co-LLM’s potential applications extend into various fields, from clinical research to education, where accurate responses are critical. By maintaining their cooperative dynamic, LLMs could conceivably work together to generate more reliable and relevant outputs continuously.

Future Implications and Applications of Co-LLM

As promising as Co-LLM is, the team at MIT has ambitious plans for its further development. One key enhancement under consideration is the implementation of a robust deferral approach that can allow the algorithm to backtrack when the expert model does not provide the correct data. This adaptability could lead to a self-correcting cycle of information, ensuring that the model can still deliver reasonable answers even when it encounters uncertainty.

Furthermore, continuous updates to the expert model could keep the LLMs aligned with the latest knowledge and developments across various industries. For example, in the realm of medicine, timely access to advancements or new findings could significantly impact treatment protocols and patient care.

The overarching goal of Co-LLM is to evolve into a versatile tool that can aid in compiling enterprise documents, refining content accuracy, and ensuring the information remains up-to-date. The potential for Co-LLM to train smaller, proprietary models that retain sensitive data while leveraging the expertise of a larger, more powerful LLM is another exciting prospect.

MIT’s Co-LLM represents a notable shift in how we approach the collaboration between language models. By embracing a more human-like interaction between general and specialized models, we can enhance the overall performance, accuracy, and efficiency of AI-generated outputs. As researchers continue to refine this groundbreaking algorithm, the implications for healthcare, education, and numerous other fields could be transformative, marking a significant step forward in the journey toward more intelligent, responsive AI systems.

Leave a Reply