Using data from a 2002 game show, Xunyu Chen, an assistant professor at Virginia Commonwealth University, has trained a computer to identify signs of lying. Human behavior carries many cues to deception and trust, and Chen argues that artificial intelligence techniques, including machine learning and deep learning, can use this information to support effective decision-making.

Chen and his research team examine high-stakes deception and trust quantitatively in their paper, “Trust and Deception with High Stakes: Evidence from the ‘Friend or Foe’ Dataset”, recently published in Decision Support Systems. The study draws on a dataset from the American game show “Friend or Foe?”, which is built around the prisoner’s dilemma, to examine cooperation and betrayal. Traditional lab experiments may lack realism and generalizability. By contrast, high-stakes settings such as “Friend or Foe?” are more realistic and demand greater cognitive effort from anyone trying to manage deceptive behavior.
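To make the prisoner’s dilemma structure concrete, here is a minimal sketch of the payoff rule as the show’s format is commonly described: two “Friend” votes split the pot, a lone “Foe” takes everything, and two “Foe” votes leave both players with nothing. The names `Choice` and `payoffs` are illustrative assumptions and do not come from the paper or its dataset.

```python
from enum import Enum


class Choice(Enum):
    FRIEND = "friend"
    FOE = "foe"


def payoffs(pot: float, a: Choice, b: Choice) -> tuple[float, float]:
    """Return (payout to player A, payout to player B).

    Assumed rule set, based on the commonly described show format:
    both Friend -> split the pot; one Foe -> the Foe takes all;
    both Foe -> nobody gets anything.
    """
    if a is Choice.FRIEND and b is Choice.FRIEND:
        return pot / 2, pot / 2
    if a is Choice.FOE and b is Choice.FOE:
        return 0.0, 0.0
    if a is Choice.FOE:
        return pot, 0.0
    return 0.0, pot


# Enumerate all four outcomes for a hypothetical pot of 1000.
for a in Choice:
    for b in Choice:
        print(a.value, b.value, payoffs(1000, a, b))
```

Under these assumed rules, choosing “Foe” never pays less than choosing “Friend” whatever the partner does, which is why a partner’s promise to cooperate is worth scrutinizing for signs of deception.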

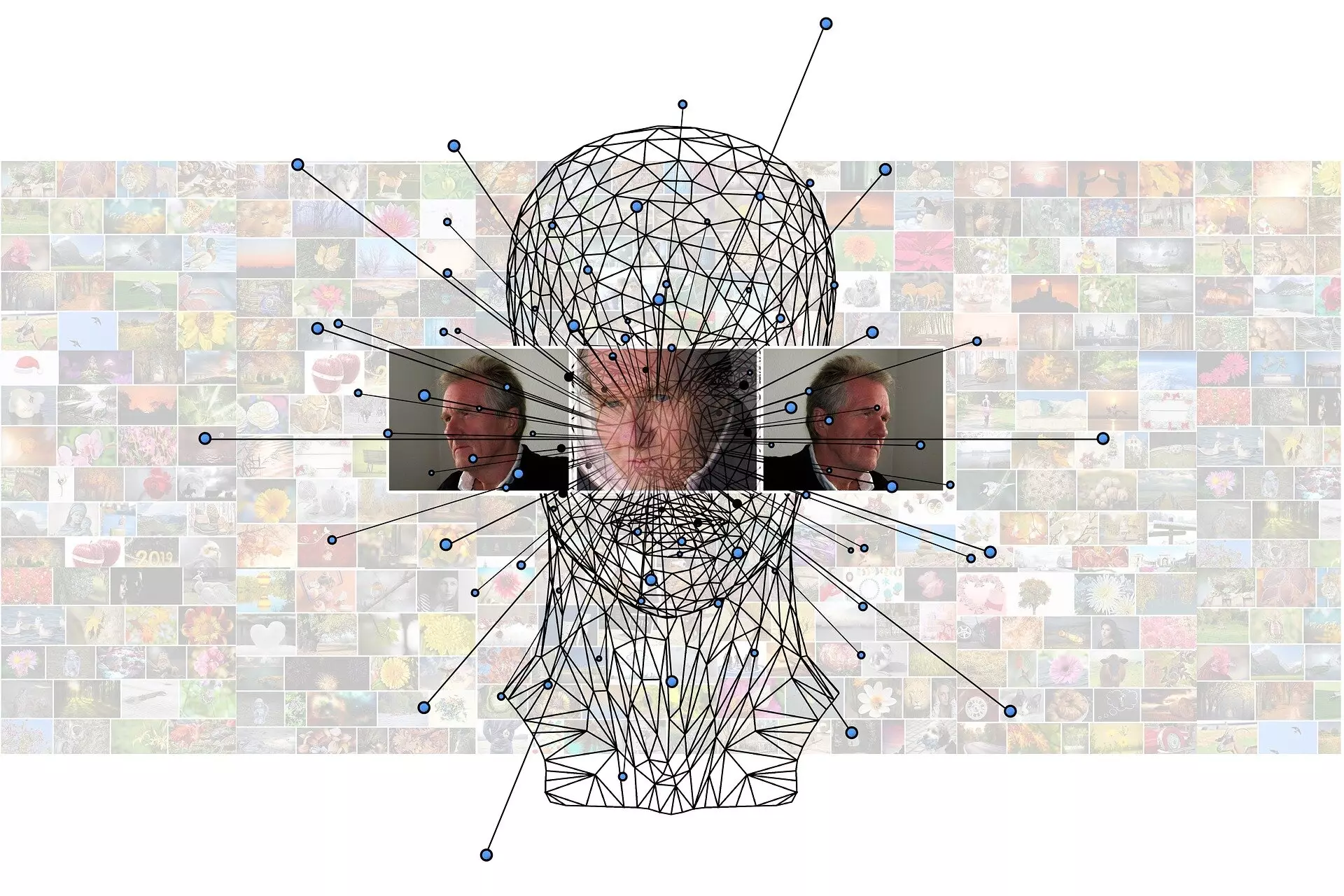

Chen and his team find multimodal behavioral cues linked to deception and trust in high-stakes decision-making. These cues, which include facial expressions, verbal patterns, and fluctuations in movement, play a central role in predicting deception. Chen introduces the concept of an automated deception detector capable of identifying deceptive behavior with high accuracy. The implications extend beyond academia to settings such as political debates, corporate negotiations, and legal proceedings.

By characterizing deception and trust behaviors in high-stakes settings, Chen’s research contributes to the understanding of human interactions that carry serious consequences. Anticipating deception from behavioral cues makes it possible to act early in critical situations where self-interest is paramount. Researchers and practitioners can use the findings to base decisions on behavioral cues that might otherwise go unnoticed.

Combining artificial intelligence with behavioral analysis offers a promising way to improve decision-making in contexts shaped by deception and trust. By using technology to interpret human behavior, researchers like Xunyu Chen are working toward more accurate detection of deception in interpersonal interactions and strategic decision-making.
