Deep learning has become a transformative force across sectors from healthcare to finance. By drawing on massive amounts of data and computational power, it supports tasks such as diagnosing diseases and predicting market trends. However, the growing demand for deep learning models brings significant challenges, chiefly around data security and privacy. Because these models are computationally intensive, they usually run on cloud servers, which raises concerns for sensitive applications such as medical diagnostics, where patient confidentiality is paramount. Hospitals and clinics are often hesitant to adopt these tools, fearing that patient information could be compromised during transmission to the cloud or while stored there.

To address these security concerns, researchers at the Massachusetts Institute of Technology (MIT) have developed a security protocol that exploits the quantum properties of light. The approach keeps data sent to and from a cloud server secure while preserving the computational capacity that deep learning applications require. The protocol encodes data into the laser light used in fiber-optic communication systems and relies on a basic principle of quantum mechanics, the no-cloning theorem, which states that an unknown quantum state cannot be perfectly copied. As a result, any attempt to intercept or duplicate the data disturbs it in a detectable way, which strengthens the security of the whole system.
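For readers who want the reasoning behind the no-cloning theorem, the standard textbook argument is short. The sketch below is general quantum mechanics, not something taken from the MIT work; the symbols $U$, $|\psi\rangle$, $|\phi\rangle$ and the blank state $|0\rangle$ are notation introduced here for illustration.

Suppose a single unitary operation $U$ could copy any state onto a blank register:

$$
U\big(|\psi\rangle \otimes |0\rangle\big) = |\psi\rangle \otimes |\psi\rangle,
\qquad
U\big(|\phi\rangle \otimes |0\rangle\big) = |\phi\rangle \otimes |\phi\rangle .
$$

Unitary operations preserve inner products, so taking the inner product of the two left-hand sides and of the two right-hand sides gives

$$
\langle \psi | \phi \rangle \, \langle 0 | 0 \rangle = \langle \psi | \phi \rangle^{2}
\quad\Longrightarrow\quad
\langle \psi | \phi \rangle \in \{0, 1\}.
$$

Such a $U$ can therefore only copy states that are identical or orthogonal to each other. It cannot copy arbitrary unknown states, which is why an attempt to duplicate quantum-encoded data cannot succeed perfectly and leaves a trace.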

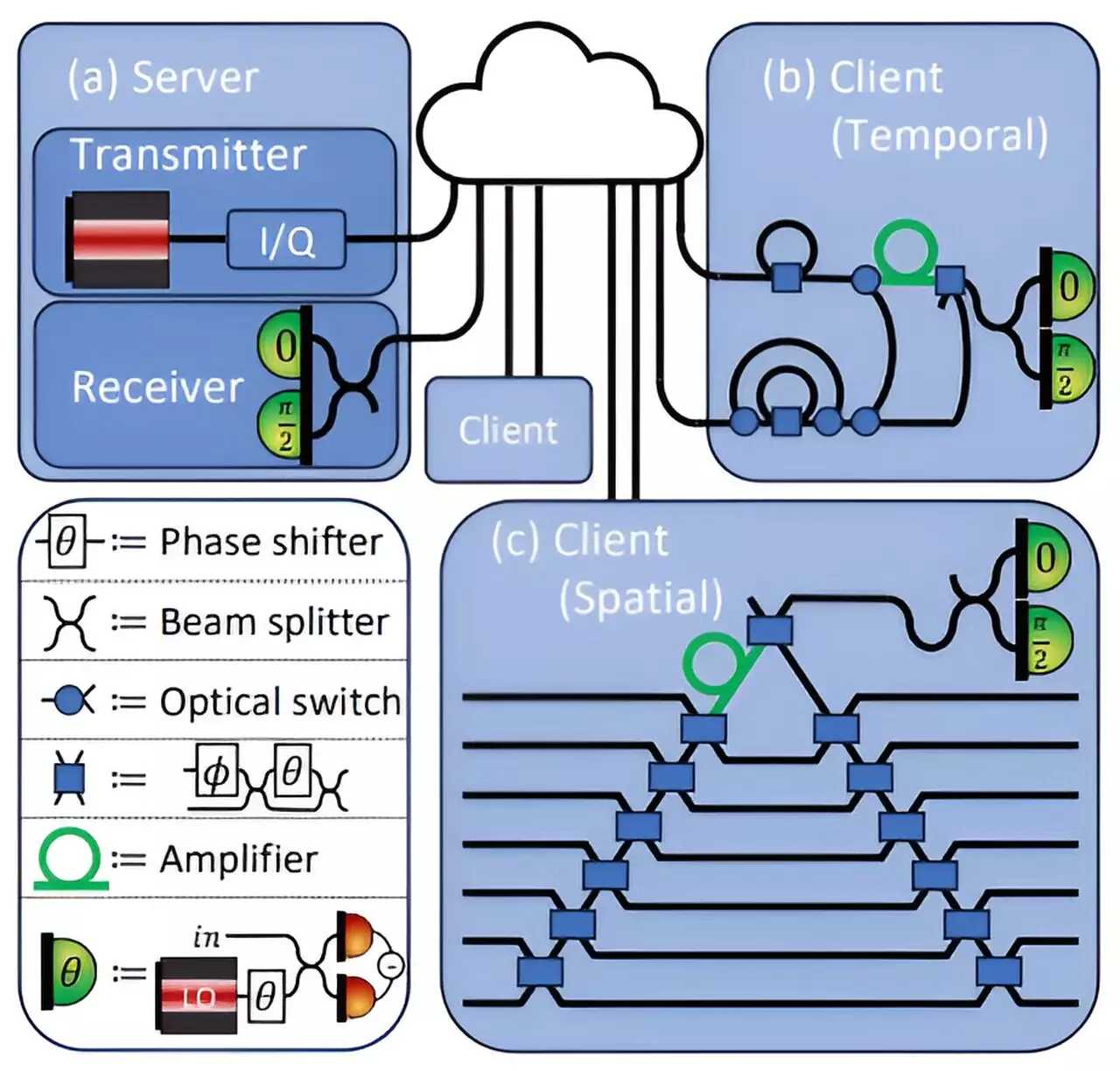

The proposed security mechanism engages two parties in a cloud-based computation environment: the client, typically a healthcare provider with sensitive data such as medical imaging, and the central server, which houses the deep learning model utilized for analysis. The objective is to glean valuable insights without compromising patient confidentiality or exposing proprietary model information. In this landscape, both the server and the client have vested interests in maintaining their respective data’s secrecy.

Central to the protocol is the encoding of the neural network’s parameters, its weights, into an optical field using laser light. A neural network consists of layers of interconnected nodes, or neurons, that process input data in sequence, with the output of one layer fed into the next. The weights are the numerical parameters of the mathematical operations each layer applies to its input. The server sends these weights to the client as light, and the client uses them to run the network on its private data; the encoding is designed so that the client cannot copy the weights in the process. The transmitted light lets the client measure the model’s output without exposing the client’s private data or the broader model architecture.
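To make the role of layers and weights concrete, here is a minimal, purely classical sketch of a layer-by-layer forward pass. It illustrates only the kind of computation the weights drive, not the optical encoding itself. The layer sizes, weight values, the ReLU activation, and the function names are all assumptions chosen for illustration.

```python
def relu(values):
    """Element-wise ReLU: negative entries become zero."""
    return [max(v, 0.0) for v in values]


def affine(weights, bias, inputs):
    """Compute weights @ inputs + bias for a single layer."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, bias)
    ]


def forward(inputs, layers):
    """Feed the input through each layer in order.

    ReLU is applied between layers but not after the last one.
    """
    activation = list(inputs)
    for index, (weights, bias) in enumerate(layers):
        activation = affine(weights, bias, activation)
        if index < len(layers) - 1:
            activation = relu(activation)
    return activation


# Hypothetical two-layer network: 2 inputs -> 2 hidden units -> 1 output.
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),
    ([[1.0, 2.0]], [0.1]),
]

out = forward([2.0, 1.0], layers)
print(out)
```

In the protocol described above, the `layers` values would belong to the server and the input vector to the client, which is why each side wants to keep its own part private.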

A key result is that the protocol preserves the accuracy of the deep learning model, maintaining 96% accuracy in the researchers’ tests, while still guarding against data leaks. When the client performs operations on the weights provided by the server, it unavoidably introduces minute errors, a consequence of the no-cloning theorem. The server can then analyze these errors to verify that no sensitive information has leaked.
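The idea of a server inspecting small errors can be illustrated with a classical toy analogy. The sketch below is not the optical protocol and makes no claim about how the MIT team performs the check. It only shows a threshold test on a disturbance estimate. The noise levels, the threshold, and every function name are hypothetical.

```python
import random


def disturbance(sent, returned):
    """Mean squared difference between what was sent and what came back."""
    return sum((r - s) ** 2 for s, r in zip(sent, returned)) / len(sent)


def server_accepts(sent, returned, threshold):
    """Accept the round only if the observed disturbance stays small."""
    return disturbance(sent, returned) <= threshold


def perturb(values, scale, rng):
    """Add zero-mean Gaussian noise of the given scale to every value."""
    return [v + rng.gauss(0.0, scale) for v in values]


rng = random.Random(0)  # fixed seed so the example is repeatable
weights = [rng.gauss(0.0, 1.0) for _ in range(1000)]

# Assumption: an honest client disturbs the weights only slightly,
# while a client trying to extract more information disturbs them a lot.
honest_return = perturb(weights, 0.01, rng)
greedy_return = perturb(weights, 0.5, rng)

THRESHOLD = 0.01  # hypothetical tolerance chosen for this toy example

print(server_accepts(weights, honest_return, THRESHOLD))
print(server_accepts(weights, greedy_return, THRESHOLD))
```

The design point the analogy captures is that the server never needs to see the client's data: it only compares what it sent with what came back.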

In practice, the protocol provides security in both directions, so that eavesdropping gains an attacker very little. A malicious actor intercepting the data would obtain only a small fraction of the information needed to compromise the client’s data or the server’s model. This two-way guarantee makes the protocol a viable way to strengthen data privacy in cloud-based deep learning.

Challenges and Future Directions

Despite the significant strides made by the MIT researchers, challenges persist in the broader application and optimization of their security protocol. Future work aims to adapt the framework for federated learning, where multiple clients collaborate on training a shared model while maintaining their data’s privacy. The implications of such adaptations could revolutionize collaborative machine learning without necessitating data centralization, thereby reducing security risks.

The researchers also hope to explore quantum computing applications, examining how quantum operations could improve both accuracy and security in data processing. As work on combining deep learning with quantum cryptography continues, further advances could benefit the many sectors that depend on secure data transmission.

A Bright Future for Secure AI

The combination of deep learning and quantum mechanics shows real potential for addressing an increasingly pressing issue: data privacy. With protocols that enable secure computation, organizations ranging from healthcare providers to financial institutions could use advanced AI without putting the confidentiality of their sensitive data at risk. As researchers continue to refine and test these methods, the outlook for secure AI is promising.
