As artificial intelligence (AI) grows more capable and sophisticated, a new kind of threat looms over the digital landscape: AI-driven fraud. Technologies that can generate hyper-realistic content, such as deepfakes and other synthetic media, have sharply escalated concerns about the trust and authenticity of online information. Many professionals across industries, especially in corporate finance, now rank AI scams as a critical risk, and recent reports suggest that more than half of them have already encountered deepfakes firsthand. This growing concern has created a shared urgency to find new ways of combating misinformation and fraud.

AI or Not, a startup specializing in AI fraud detection, recently drew attention after securing $5 million in seed funding. The round, led by Foundation Capital with participation from investors including GTMFund and Plug and Play, is intended to strengthen the company’s ability to detect AI-generated content. The need for such tools is underscored by projections that generative AI scams could cause losses exceeding $40 billion in the United States within the next two years, a staggering figure that reflects the potential for widespread harm.

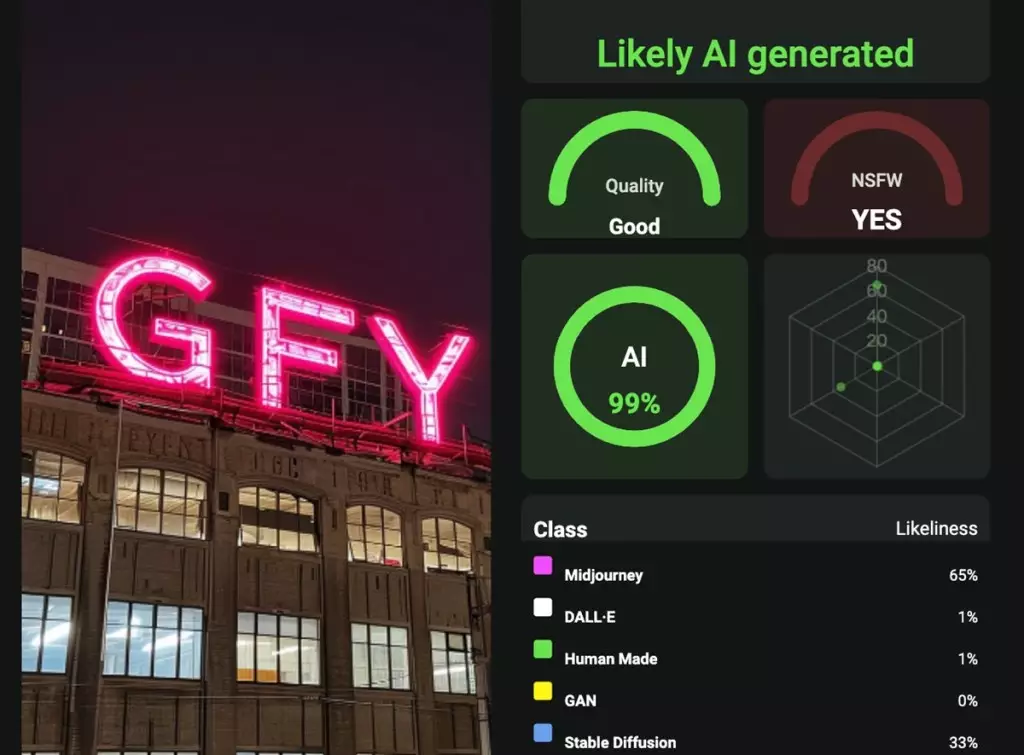

AI or Not uses proprietary algorithms to analyze and verify the authenticity of images, audio, and video, including deepfakes. Its platform is designed to catch malicious exploits, such as AI-generated impersonations of public figures or misleading synthetic content, that can undermine public trust. With more than 250,000 users to date, the startup is positioning itself as a safeguard against digital fraud and deception.

The demand for transparency has grown alongside the shift toward digital interaction. Recent public backlash against tech giants such as Meta is a reminder of how much people value trustworthy online content. Consumers, organizations, and governments increasingly recognize the importance of reliable information, which in turn fuels the need for AI detection technologies that can offer assurance amid rising misinformation.

The new funding is pivotal for the company’s trajectory. It will support the development of more sophisticated tools that can keep pace with evolving digital threats. The company aims not only to defend against existing threats but also to anticipate and counter future challenges posed by deceptive AI. According to Zach Noorani of Foundation Capital, the backing for AI or Not reflects a broader movement to safeguard digital integrity as new technologies make authenticity harder to establish.

Anatoly Kvitnitsky, CEO of AI or Not, emphasizes the dual nature of generative AI: while it has unlocked extraordinary potential, it has also introduced new vulnerabilities for individuals and organizations. The company’s mission reflects a broader goal of building a safe digital ecosystem in which technology enhances authenticity rather than compromising it.

AI or Not’s approach reflects a growing recognition that combating AI-fueled misinformation is a collective responsibility. As users and institutions grapple with the fallout of AI misuse, real-time detection tools are becoming indispensable. By using AI to proactively identify fraud and deepfakes, AI or Not aims to uphold trust and integrity in the digital sphere.

As the landscape evolves, transparency and verification of digital content will only become more important. Initiatives such as AI or Not represent the kind of proactive measures needed to protect organizations and individuals from the risks of an AI-saturated world. The road ahead will require collaboration among stakeholders to anticipate those risks and build a more secure future shaped by responsible AI use.
