In recent years, advances in artificial intelligence (AI) have been dramatic, yet many robots still fall short in intelligence and adaptability. Most industrial robots today are highly specialized machines limited to performing specific tasks in meticulously sequenced operations. They have little understanding of their environment, which makes them rigid when faced with unexpected changes or challenges. Robots that can perceive and manipulate objects do exist, but their dexterity remains limited, which points to a critical deficit in overall physical intelligence.

The Need for Versatility in Robotics

To unlock the full potential of robotics, machines must evolve beyond programs that execute predefined routines. More versatile robots that can handle a broader range of tasks with minimal guidance are essential in both industrial and domestic settings. Everyday household tasks are complex and varied, so home robots must learn and adapt quickly to changing circumstances. Optimism about advances in AI has spurred interest in robotics, with figures like Elon Musk making headlines by announcing the development of a humanoid robot, Optimus. Musk has touted the robot as available by 2040 at a price of $20,000 to $25,000 and capable of carrying out a wide variety of tasks.

Historically, robot learning has been restrictive: one machine masters a single task and cannot transfer that knowledge to other applications. However, recent academic work indicates that, with the right scale and adjustments, learning can be transferred across tasks and robots. For instance, Google’s 2023 project Open X-Embodiment is a collaboration across multiple research labs that pooled learning from 22 different robots at 21 research institutions. This collaborative approach gives robots an opportunity to learn from far more varied data than any single lab could provide.

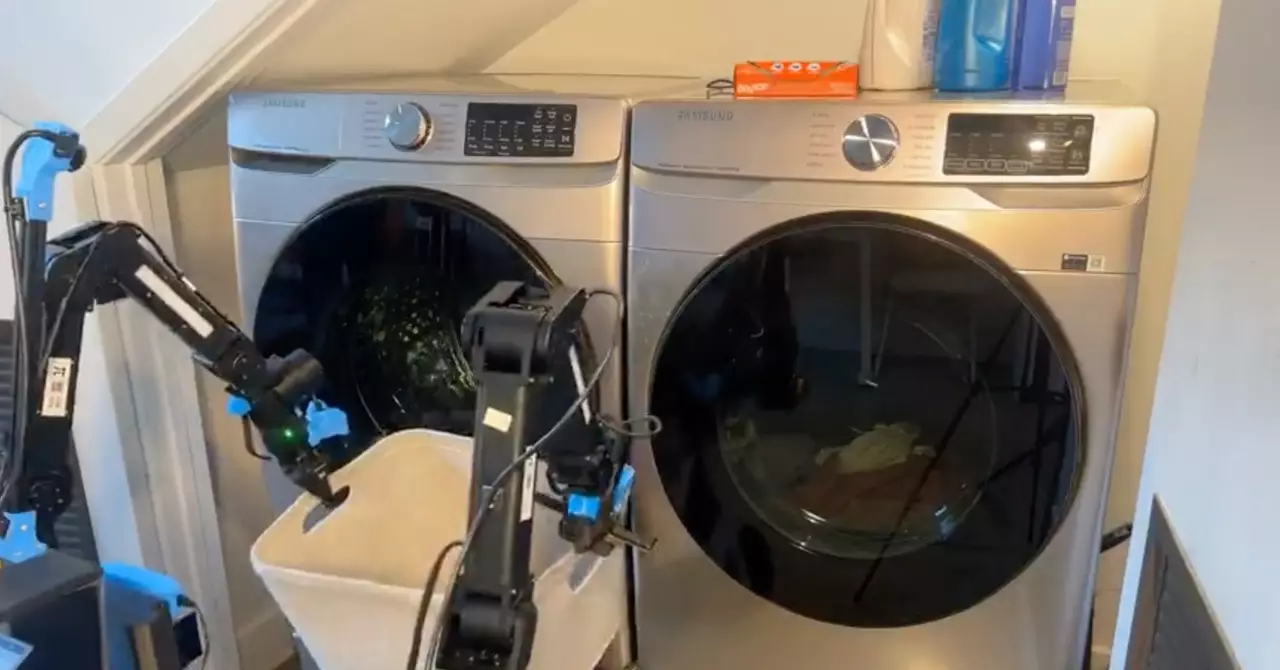

A fundamental challenge in robotics today is that far less data is available for training robots than for training AI models on text. Many companies must therefore create their own datasets to improve their robots' ability to learn. For example, the company Physical Intelligence combines vision-language models (models trained on both images and text) with diffusion modeling, a technique borrowed from AI image generation. The method aims to enable more general learning, which, if successful, could substantially improve robots' capabilities.

Despite notable progress in robotic intelligence, robots that can undertake any task asked of them remain a distant goal. The current experiments in scaling up learning are early steps toward a future in which robots are not only tools but true partners in work and life. Experts describe the present stage as one of building foundational frameworks, akin to scaffolding, on which later advances can rest. The journey to fully autonomous and intelligent robots still faces many challenges and will demand persistent effort in both research and innovation.
