Meta Platforms, Inc., the tech giant formerly known as Facebook, is once again navigating the choppy waters of facial recognition technology. The company is testing new security measures aimed at the persistent problem of scams that use images of famous personalities. By deploying facial recognition to combat these deceptive practices, Meta has to weigh improved user safety against the risk of public backlash over privacy.

The internet has given rise to numerous scams targeting unsuspecting users, with “celeb-bait” being a particularly insidious form. In this scheme, fraudsters exploit the likeness of public figures to draw people into fraudulent advertisements, which often lead to malicious websites. Meta is testing a facial matching process that identifies ads using images of celebrities and checks them against the celebrities’ official profiles. If Meta’s systems flag a suspected scam ad featuring a recognizable face, they compare that face with the public figure’s profile pictures to judge whether the promotion is legitimate.

As described by Meta, “If our systems suspect that an ad may be a scam that contains the image of a public figure at risk for celeb-bait, we will try to use facial recognition technology to compare faces in the ad to the public figure’s Facebook and Instagram profile pictures.” This indicates a proactive approach to filtering out scams. It also raises a key question: what happens to the biometric data that is processed along the way?

Meta’s history with facial recognition is not without blemishes. The company faced intense scrutiny in previous years, which led it to discontinue its facial recognition features in 2021. Privacy advocates had raised alarms about the potential misuse of facial data and urged greater regulation of such technologies. That backlash serves as a cautionary tale as Meta reintroduces facial recognition, albeit in a limited capacity.

While testing the facial-matching feature for ad verification, Meta assures users that all facial data collected will be deleted immediately after use. The company emphasizes that this data will not be stored for future analysis or any other purpose, a commitment meant to signal accountability. However, the potential for abuse, even with stringent guidelines, remains a primary concern among both users and regulators.

Global Implications of Facial Recognition Technology

Facial recognition is used around the world with varying degrees of acceptance and ethical oversight. In countries like China, the technology has been deployed for state surveillance: catching jaywalkers, imposing fines, and tracking minority groups such as the Uyghur Muslims. These divergent uses highlight significant ethical issues, particularly where human rights are concerned, and they reinforce the Western view that such technologies call for scrutiny and caution.

As Meta navigates its second wave of facial recognition, the company’s efforts are likely to come under intense public and regulatory scrutiny. While facial recognition can add value from a security perspective, it is imperative to address the ethical ramifications of collecting biometric data.

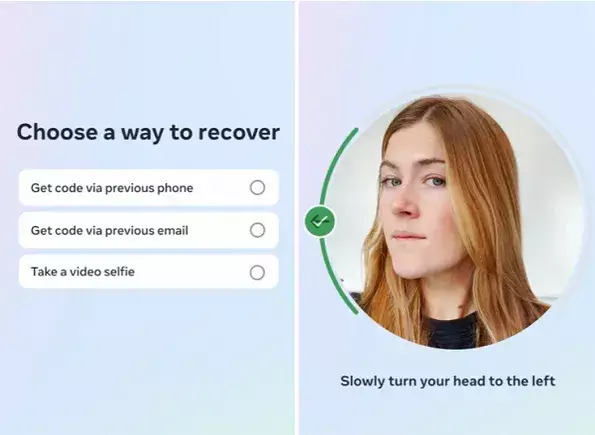

In an additional experiment, Meta is exploring the use of video selfies for identity verification when users attempt to regain access to compromised accounts. Users will need to upload a video selfie, which will then be compared against the account’s profile pictures. The feature aims to bolster user security and resembles the face-based unlock systems used on mobile devices.

Meta claims the video selfies will be encrypted and never visible on profiles, again stressing that any data gathered will be promptly deleted after verification. This feature appears to fit within the broader trend of securing digital accounts against unauthorized access, but it still carries risks and implications for privacy.

Meta’s renewed engagement with facial recognition technology raises questions about the balance between enhancing security and protecting user privacy. While the company has laid out a clear framework for the responsible use of facial recognition in limited scenarios, skepticism remains over whether its practice will match its stated policy.

As Meta embarks on this effort, it faces an uphill challenge in assuaging the worries of users and regulators alike. Calls for greater transparency about how it processes and stores biometric data, along with concerns about the ethics of surveillance, will likely continue unabated. Meta will have to tread carefully to demonstrate its intentions and keep user trust as its security measures evolve.

The road ahead is fraught with potential pitfalls, but should Meta successfully manage these crucial discussions around security and privacy, it may very well redefine its approach to user safety and trust in the digital landscape.
