In recent years, large language models (LLMs) such as GPT-4 have emerged as transformative forces in artificial intelligence. These models perform a range of tasks, including text generation, translation, and conversational interfaces such as chatbots, and they have fundamentally altered how we interact with technology. At their core, LLMs operate on a simple yet profound principle: predicting the next word (more precisely, the next token) in a sequence from the context supplied by the preceding words. Recent work, however, has revealed an intriguing asymmetry: these models are consistently better at predicting what comes next (the “future” of a text) than at predicting what came before (its “past”). This “Arrow of Time” effect has implications that extend beyond artificial intelligence, touching on our understanding of time and causality.
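To make the next-word principle concrete, here is a minimal sketch that swaps the neural network for a toy bigram (word-pair) count model. The function names `train_bigram` and `predict_next` and the sample sentence are hypothetical; real LLMs work on tokens and condition on far longer contexts than a single preceding word.

```python
from collections import Counter, defaultdict

# Hypothetical toy example: a word-level bigram model, not how GPT-4 works internally.


def train_bigram(words):
    """Count how often each word follows each preceding word."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if it never had one."""
    followers = counts.get(word)  # .get() does not trigger the defaultdict factory
    if not followers:
        return None
    return followers.most_common(1)[0][0]


text = "the cat sat on the mat and the cat slept"
model = train_bigram(text.split())
print(predict_next(model, "the"))  # prints "cat": it follows "the" twice, "mat" once
```

The model "predicts the future" purely from statistics of what followed each context in its training text, which is the same idea an LLM applies at vastly larger scale.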

The “Arrow of Time” Effect and Its Discovery

Recent research by a team from the École Polytechnique Fédérale de Lausanne (EPFL) and Goldsmiths, University of London, uncovered this directional bias in language models. The researchers, Professor Clément Hongler and Jérémie Wenger, together with machine learning researcher Vassilis Papadopoulos, set out to determine whether language models could generate stories in reverse, starting from an ending and working backward. Their experiments revealed a consistent pattern: LLMs are worse at backward prediction, with a systematic gap between their accuracy at predicting the next word and at predicting the previous one.

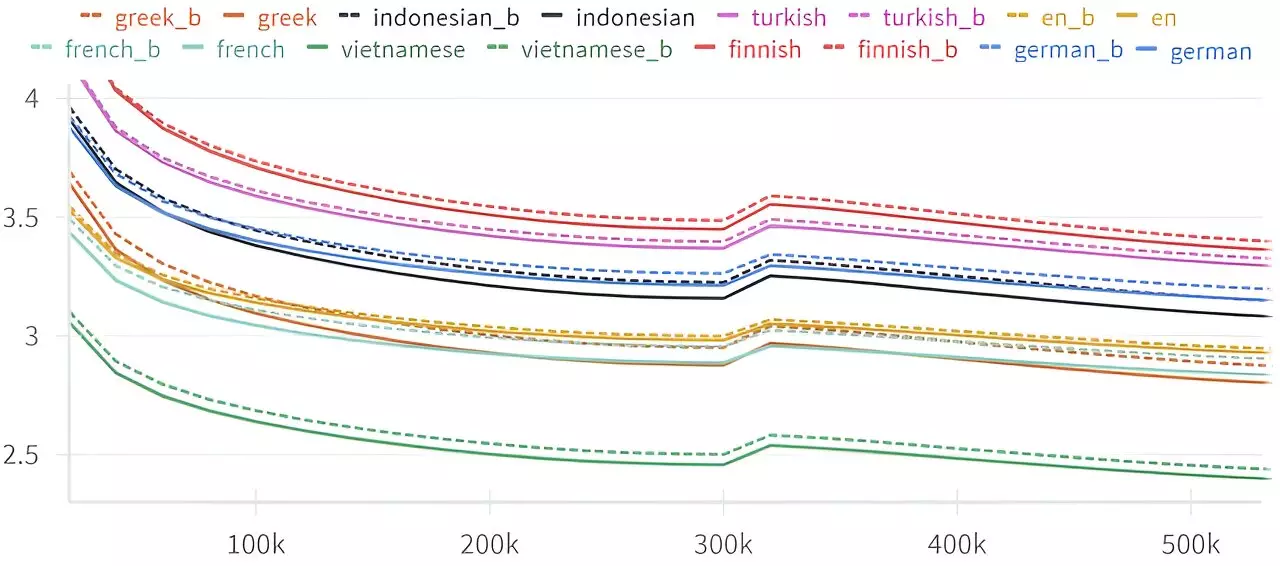

This bias raises a critical question. In principle, the two tasks are equivalent: the probability of a text is the same whether it is factored word by word from start to finish or from finish to start. Why, then, do LLMs display this asymmetry? The researchers examined several architectures, including Generative Pre-trained Transformers (GPT), Gated Recurrent Units (GRU), and Long Short-Term Memory (LSTM) networks, and found that every model tested showed the same preference for forward prediction.
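One way to quantify such an asymmetry is to train one model on text in its normal order and an otherwise identical model on the same text reversed, then compare their average loss (negative log-likelihood) on held-out text. The sketch below illustrates that measurement with a toy add-one-smoothed character bigram model, not the GPT, GRU, or LSTM networks used in the study; the helper names `train` and `avg_loss` and the sample strings are hypothetical.

```python
import math
from collections import Counter

# Toy stand-in (an assumption for illustration) for the GPT/GRU/LSTM models in
# the study: an add-one-smoothed character bigram model.


def train(text):
    """Fit the model on `text`; return a function giving log P(next | prev)."""
    pairs = Counter(zip(text, text[1:]))
    prev_counts = Counter(text[:-1])
    # One extra slot shared by all characters never seen in training.
    vocab_size = len(set(text)) + 1

    def log_prob(prev, nxt):
        return math.log((pairs[(prev, nxt)] + 1) / (prev_counts[prev] + vocab_size))

    return log_prob


def avg_loss(log_prob, text):
    """Average negative log-likelihood, in nats per character, of each next character."""
    steps = list(zip(text, text[1:]))
    return -sum(log_prob(prev, nxt) for prev, nxt in steps) / len(steps)


# Hypothetical sample data; a real experiment needs a large corpus.
train_text = "the cat sat on the mat. the dog sat on the log. a cat and a dog met on a mat."
test_text = "the dog sat on the mat."

forward = train(train_text)
# Predicting the previous character = predicting the next character of reversed text.
backward = train(train_text[::-1])

fwd = avg_loss(forward, test_text)
bwd = avg_loss(backward, test_text[::-1])
print(f"forward: {fwd:.3f}  backward: {bwd:.3f}  gap: {bwd - fwd:+.3f} nats/char")
```

The key design choice is that both directions share the same model class and training data, so any gap can only come from the direction of prediction. On a corpus this small, the sign and size of the gap are essentially noise; the study's finding concerns large neural models trained on large corpora.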

The roots of this inquiry go back to Claude Shannon, the pioneering figure of information theory. In his landmark 1951 paper, “Prediction and Entropy of Printed English”, Shannon asked whether predicting the next letter in a text is as hard as predicting the preceding one. He observed that humans found backward prediction more difficult, although the gap in performance was small. This raises a fascinating point: despite theoretical parity between the two directions, actual prediction, by people and by models alike, appears to favor the forward one.
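The “theoretical parity” follows from the chain rule of probability. For a sequence of n words w_1, …, w_n (notation introduced here for illustration), the joint probability can be factored left to right or right to left, and both factorizations give exactly the same value:

```latex
P(w_1, \dots, w_n)
  \;=\; \prod_{t=1}^{n} P(w_t \mid w_1, \dots, w_{t-1})
  \;=\; \prod_{t=1}^{n} P(w_t \mid w_{t+1}, \dots, w_n)
```

A perfect forward predictor and a perfect backward predictor would therefore assign the same total log-loss, -log P(w_1, …, w_n), to any text. A measured gap reflects how hard each direction is to learn in practice, not a difference in the information the text contains.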

Hongler suggests that this sensitivity of LLMs to the direction of time reveals deeper characteristics of the structure of language. The research indicates that the effect is not an isolated quirk: it appeared consistently across the languages and models studied. The researchers argue that understanding this property could lead to better models and might even offer a way to detect intelligence in other organisms or in artificial systems.

The implications of this study extend far beyond artificial intelligence. It prompts us to reconsider language itself: the way we communicate and follow narratives is closely tied to our perception of time and causality. Language has an inherently forward-moving structure, much as we experience events in real life. This observation could inform not only LLM development but also our understanding of human cognition.

The finding also raises tantalizing, if speculative, questions about the nature of intelligence and consciousness. If this time-sensitive property turns out to be a signature of intelligent information processing, researchers could explore using it to detect intelligence in other entities, whether biological or synthetic. Such a tool could change how we approach the search for extraterrestrial life or the development of AI systems capable of nuanced understanding and interaction.

The Connection to the Passage of Time

The research may also bear on one of the enduring questions in physics: the nature of time itself. The parallel between language processing and the passage of time suggests that our sense of causality and temporal flow may be an emergent phenomenon closely linked to how intelligent agents process information. As physicists and linguists alike grapple with the nature of time, this work opens a new intersection between their disciplines that could prompt new theories and insights.

In an era defined by rapid advances in AI, the “Arrow of Time” effect in language models highlights a compelling connection between language, time, and cognition. As researchers continue to probe this relationship, the results could reshape both artificial intelligence and our basic understanding of what it means to process information. The work is not merely academic: it touches on how we experience the world and on our ongoing effort to decode the intricacies of language and the universe at large. Seen this way, time is not merely a sequence of moments but a thread that runs through language, intelligence, and reality.
