Quantum computers have shown promise in outperforming conventional computers in some information processing tasks, such as machine learning and optimization. However, their deployment on a large scale is hindered by their sensitivity to noise, which leads to errors in computations. Quantum error correction has been proposed as a technique to address these errors by monitoring and restoring computations in real time. Despite recent progress in this area, the implementation of quantum error correction remains experimentally challenging and comes with significant resource overheads.

An alternative to quantum error correction is quantum error mitigation, which works more indirectly: it lets a noisy computation run to completion and then infers the correct result at the end. This method was introduced as a temporary solution until full error correction could be achieved. However, a study by researchers at several institutions showed that quantum error mitigation becomes highly inefficient as quantum computers are scaled up in size.

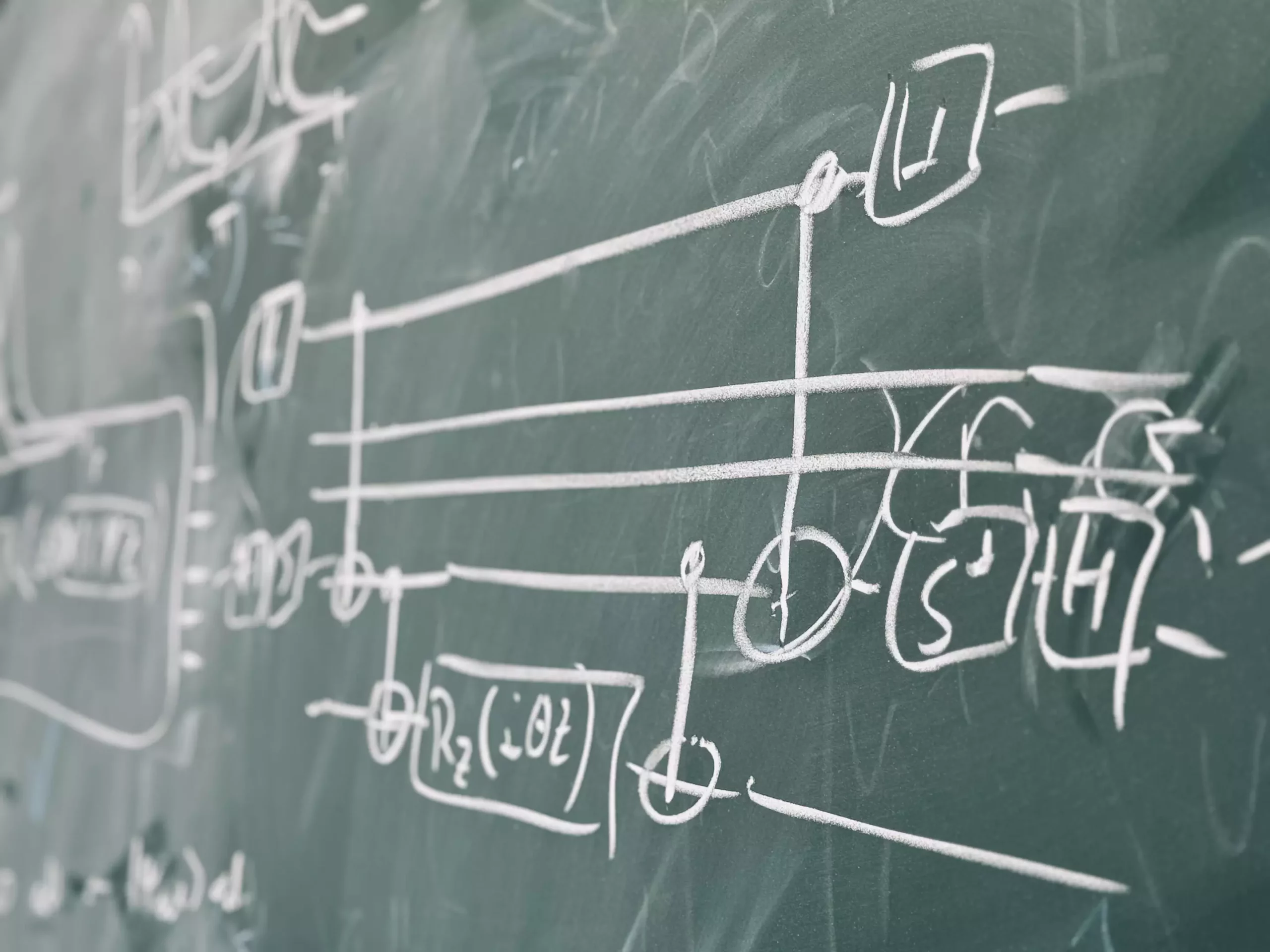

The research team led by Yihui Quek explored the boundaries of quantum error mitigation and found that as quantum circuits grow larger, the effectiveness of error mitigation decreases. One of the limitations they identified concerned the ‘zero-noise extrapolation’ scheme, which proved to be non-scalable because noise in the system increases with circuit size. Each layer of quantum gates in a circuit introduces errors, making it challenging to maintain accurate computations.
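To make the idea behind the extrapolation scheme mentioned above (commonly called zero-noise extrapolation) more concrete, here is a minimal sketch. It is not the method analyzed in the study. It assumes a toy model in which noise shrinks an ideal expectation value exponentially, and the function names `noisy_expectation` and `extrapolate_to_zero`, as well as the `decay` parameter, are hypothetical. The computation is deliberately run at amplified noise levels, and a polynomial through those results is evaluated at zero noise.

```python
import math

def noisy_expectation(scale: float, ideal: float = 1.0, decay: float = 0.1) -> float:
    """Toy noise model (an assumption): the ideal value decays
    exponentially with the noise scale factor."""
    return ideal * math.exp(-decay * scale)

def extrapolate_to_zero(scales, values):
    """Evaluate the Lagrange interpolating polynomial through
    (scale, value) pairs at scale = 0 (Richardson-style extrapolation)."""
    estimate = 0.0
    for i, (xi, yi) in enumerate(zip(scales, values)):
        weight = 1.0
        for j, xj in enumerate(scales):
            if j != i:
                weight *= (0.0 - xj) / (xi - xj)
        estimate += weight * yi
    return estimate

# Run the "circuit" at 1x, 2x, and 3x the base noise level.
scales = [1.0, 2.0, 3.0]
values = [noisy_expectation(s) for s in scales]

# Extrapolate back to the zero-noise limit.
mitigated = extrapolate_to_zero(scales, values)
print("unmitigated:", values[0])
print("mitigated:  ", mitigated)
```

In this toy setting the extrapolated value lands closer to the ideal value than the unmitigated one. Note that the extrapolation weights for these three noise levels are 3, -3, and 1, so any statistical error in the individual measurements is amplified. As the noise grows, more runs are needed to keep that error under control.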

Quantum circuits consist of multiple layers of gates that advance computations, but noisy gates introduce errors that accumulate throughout the circuit. This leads to an inherent paradox where deeper circuits, necessary for complex computations, are also noisier and more error-prone. The study shows that certain circuits require an unfeasible number of runs to mitigate errors effectively, regardless of the specific algorithm used.
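The way accumulated noise drives up the number of runs can be illustrated with a rough back-of-the-envelope sketch. It assumes a simplified model in which every layer shrinks the measured signal by a fixed factor; this is an illustrative assumption, not the bound derived in the study. The names `attenuation`, `shots_needed`, `p_layer`, and `target_error` are hypothetical.

```python
import math

def attenuation(p_layer: float, depth: int) -> float:
    """Factor by which the toy noise model shrinks the ideal signal
    after `depth` layers, each with error rate `p_layer`."""
    return (1.0 - p_layer) ** depth

def shots_needed(p_layer: float, depth: int, target_error: float = 0.01) -> int:
    """Rough number of circuit runs needed to recover the signal
    to within `target_error` after rescaling by the attenuation."""
    # The mean of N measurements of a +/-1 observable has a standard
    # error of at most about 1/sqrt(N). Dividing by the attenuation
    # factor to undo the noise amplifies that error by the same factor.
    a = attenuation(p_layer, depth)
    return math.ceil(1.0 / (target_error * a) ** 2)

# With a 1% error rate per layer, compare shallow and deep circuits.
for depth in (10, 100, 1000):
    print(depth, shots_needed(0.01, depth))
```

Even in this simplified picture, the required number of runs grows exponentially with circuit depth, which gives some intuition for why deep circuits become impractical to mitigate.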

The findings suggest that as quantum circuits scale up, the effort and resources needed to run error mitigation increase significantly. This challenges the initial belief that error mitigation would be achievable within current experimental capabilities. The study serves as a wake-up call for quantum physicists and engineers to rethink existing error mitigation schemes and develop more efficient strategies.

While the study sheds light on the limitations of quantum error mitigation, the researchers aim to focus on potential solutions to these challenges in future studies. Collaborators are already exploring combinations of randomized benchmarking and quantum error mitigation techniques to make error mitigation more efficient on large-scale quantum computers. By developing new mathematical frameworks and strategies, researchers hope to advance the field of quantum error mitigation and enable the realization of quantum advantage without sacrificing scalability.
