Imitation learning has long been considered a promising way to teach robots to perform everyday tasks reliably and precisely. Its success, however, depends heavily on detailed human demonstrations, which supply the data a robot needs to reproduce specific movements. A common way to collect these demonstrations is teleoperation, in which a human controls a robotic manipulator to accomplish a task. Despite its potential, existing teleoperation methods often struggle to process and reproduce the complex, coordinated movements that humans perform.

Recently, researchers at the University of California, San Diego introduced Bunny-VisionPro, a teleoperation system that lets a human operator control robotic systems to complete bimanual dexterous manipulation tasks. The system, outlined in a paper on the arXiv preprint server, aims to streamline the collection of human demonstrations for imitation learning. Xiaolong Wang, one of the paper’s co-authors, emphasized the need for advances in bimanual teleoperation, noting that few vision-based teleoperation systems prioritize dual-hand control for tasks that require hand coordination.

The primary goal of Bunny-VisionPro is to serve as a versatile teleoperation platform that adapts to various robots and tasks, simplifying the collection of demonstrations used to train robot control algorithms. Wang highlighted the system’s ability to make teleoperation and demonstration collection intuitive and immersive, comparing the experience to playing a virtual reality game. Bunny-VisionPro lets human operators control dual robot arms and multi-fingered hands in real time, providing high-quality data for imitation learning.

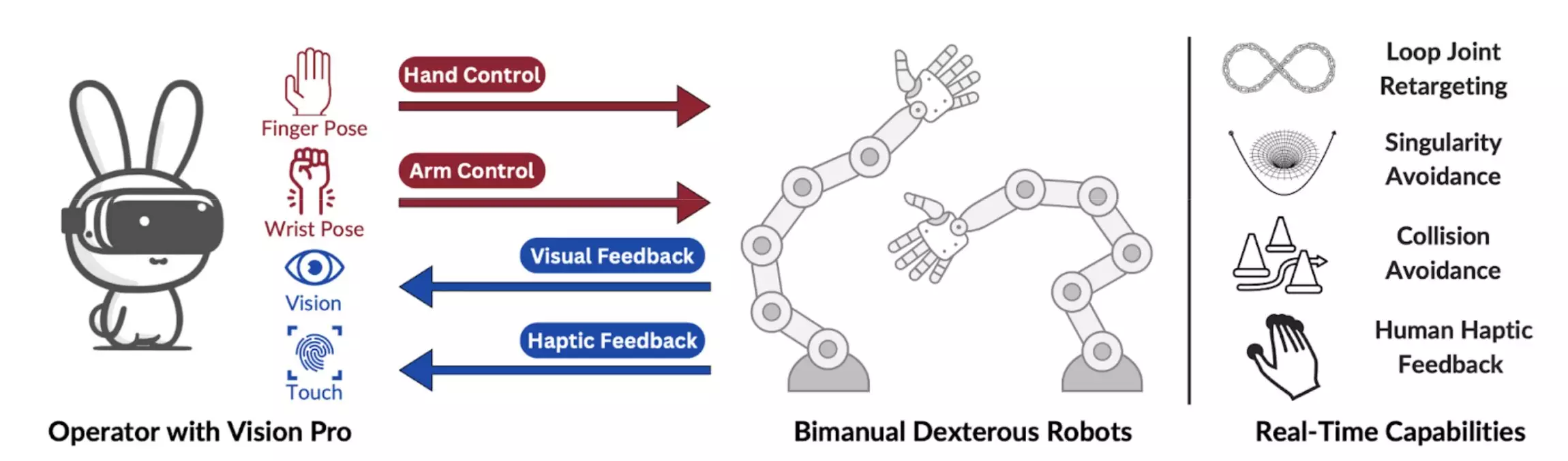

Bunny-VisionPro comprises three main components: an arm motion control module, a hand and motion retargeting module, and a haptic feedback module. The arm motion control module addresses singularity and collision issues by mapping human wrist poses to the robot’s end-effector pose. The hand and motion retargeting module handles the mapping of human hand poses to robot hand poses, including support for loop-joint structures. Finally, the haptic feedback module enhances the immersive experience by transferring robot tactile sensing to human haptic feedback through a combination of algorithms and hardware design.
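To make two of these mappings concrete, here is a minimal Python sketch. It is not the authors’ implementation: the function names, the workspace limits, the scaling factor, and the force thresholds are all hypothetical, chosen only for illustration. The arm mapping handles position only (orientation, singularity handling, and collision avoidance are omitted), and the haptic mapping assumes a single scalar force reading converted to a normalized vibration intensity.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Workspace:
    """Hypothetical axis-aligned box the end effector may move in (meters)."""
    lower: Vec3
    upper: Vec3


def clamp(value: float, low: float, high: float) -> float:
    """Restrict value to the closed interval [low, high]."""
    return max(low, min(high, value))


def wrist_to_end_effector(wrist: Vec3, wrist_origin: Vec3, ee_origin: Vec3,
                          workspace: Workspace, scale: float = 1.0) -> Vec3:
    """Relative position mapping: the wrist's displacement from its starting
    point is scaled, added to the end effector's starting point, and clamped
    to the workspace box. Orientation is ignored in this sketch."""
    target = []
    for w, w0, e0, lo, hi in zip(wrist, wrist_origin, ee_origin,
                                 workspace.lower, workspace.upper):
        target.append(clamp(e0 + scale * (w - w0), lo, hi))
    return (target[0], target[1], target[2])


def tactile_to_vibration(force_newtons: float, deadband: float = 0.2,
                         max_force: float = 5.0) -> float:
    """Map a scalar contact force to a vibration intensity in [0, 1].
    Forces at or below the deadband give no vibration; forces at or above
    max_force saturate at full intensity. Thresholds are assumed values."""
    if force_newtons <= deadband:
        return 0.0
    return clamp((force_newtons - deadband) / (max_force - deadband), 0.0, 1.0)


if __name__ == "__main__":
    box = Workspace(lower=(0.2, -0.3, 0.1), upper=(0.7, 0.3, 0.6))
    # The wrist moved 10 cm forward and 50 cm up from its starting pose.
    print(wrist_to_end_effector((0.1, 0.0, 0.5), (0.0, 0.0, 0.0),
                                (0.4, 0.0, 0.3), box))
    print(tactile_to_vibration(2.6))
```

In the usage example, the requested height exceeds the assumed workspace, so the target is held at the upper limit rather than followed. A real system would need a full pose mapping and an inverse-kinematics solver on top of this.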

One of the key advantages of Bunny-VisionPro is that it enables safe, real-time control of a bimanual robotic system. Unlike previous solutions, it integrates haptic and visual feedback, which makes teleoperation more immersive for human users and improves the system’s overall success rates. Wang emphasized the importance of balancing safety and performance: the system allows precise control of robot arms and hands with minimal delay while handling collision avoidance and singularities, all critical factors for real-world robotic applications.

Bunny-VisionPro could change how demonstrations are gathered for imitation learning frameworks. If the system gains traction, it may be adopted in robotics labs worldwide and inspire the creation of similar immersive teleoperation systems. Looking ahead, Wang and his colleagues plan to enhance the system’s manipulation capabilities by using the robot’s tactile information to achieve greater precision and adaptability.
