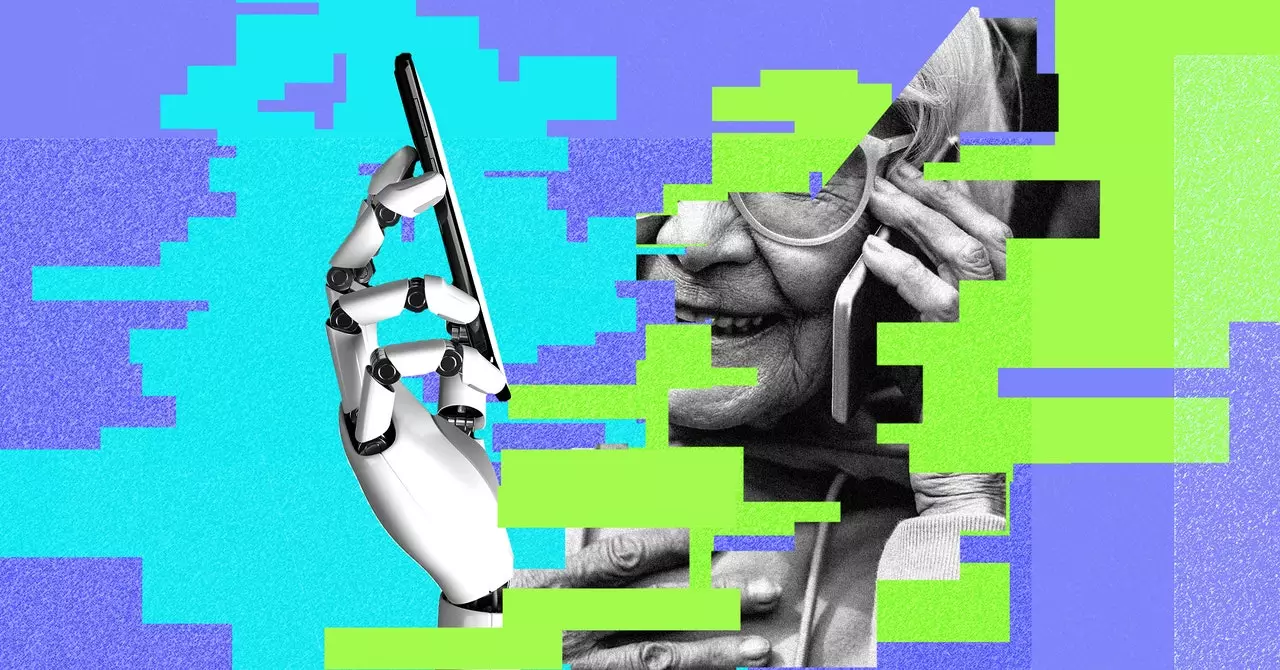

The rise of artificial intelligence has brought a new wave of scams, particularly AI voice cloning. Scammers can now create fake audio of a person’s voice that is difficult to distinguish from the real thing. These voice clones are trained on existing audio clips of human speech, allowing scammers to imitate almost anyone, including your loved ones. With the latest models able to speak in multiple languages, the risk of falling victim to an AI voice cloning scam is growing.

When faced with an urgent and unexpected call requesting money or personal information, it is crucial to take precautionary steps to avoid falling victim to an AI voice cloning scam. One effective strategy is to hang up and call back the person who supposedly contacted you, using a verified number. This simple step can help confirm the authenticity of the call and prevent you from being deceived by a scammer posing as a loved one.

In addition to calling back, verify the caller’s identity through a different line of communication, such as video chat or email, to confirm that the person on the other end is who they claim to be. Agreeing on a safe word that only you and your loved ones know adds an extra layer of security, especially for people who may be difficult to reach in an emergency.

Be wary of any unusual request for money or personal information over the phone. Scammers often create a sense of urgency and exploit your emotions to push you into acting before you think. By pausing to ask personal questions that only a loved one could answer, you can weed out potential scammers and protect yourself from an AI voice cloning scam.

Taking a moment to reflect and resisting the urge to act impulsively can be the key to avoiding scams. Scammers rely on triggering heightened emotions to deceive their victims. By staying vigilant and approaching unexpected calls with caution, you can reduce the risk of falling for an AI voice cloning scam. Trust your instincts and take the steps needed to protect yourself.
